The lockdown continues to be relaxed and it appears that much of the commercial world is easing back into work, given the number of projects we are discussing, specifying, quoting and beginning. There is a growing acceptance that the world has changed and the future is virtual. This was our week.

Toby – Leading From Home-ish

Not really working at home full-time now. But the office is pretty close to home so it’s similar. Happy news to share this week; my courgette plant is courgetting…

In other news we’re approaching a deadline crunch so we will have a lot to show very soon. Working *on* the business, we have a few projects ongoing internally, some of which you’ll see in the diary entries below, as we continually look to improve the way we work and, in terms of the website and some amazing artwork, the way we present ourselves to the outside world!

Josh – Programme Manager

As promised last week, this week I found time to detail my activities. This week we began the next scenario in the Customer Experience VR project, which concerns a broken-down wheelchair-adapted vehicle in a live lane of traffic.

These vehicles are incredibly valuable, and they’re difficult and expensive to fix, so negotiating the removal of a vehicle like that can be really worrying for drivers.

Equally, wheelchair users have specific accessibility requirements and navigating this in a busy motorway environment can be stressful for both the customer and the traffic officer. Our solution is designed to help prepare traffic officers for this kind of situation.

Elsewhere, we began budgeting and preparing planning documentation for an Incident management training solution that will live inside the Test and Innovation Centre in West Hallam, Derby. This is the most complex project I have worked on and I recognise the planning phase will be critical.

We came up with concepts for an Augmented Reality Marketing Experience for a premium whisky brand, which is a definite change of pace from what we’re used to. I’m confident we’ve met the brief, and it’s nice getting to be a bit more creative with our ideas.

Also implemented this week was a new company wiki that lets us store all our developer documentation in one place, which is handy given all the planning that’s gone on this week.

Cat – Lead Programmer

This week I’ve started doing some deeper research into Unreal Engine to evaluate how we might start to use it as a team. In a lengthy chat among the team, we each shared our thoughts and raised our various concerns about using it.

It was interesting to hear the viewpoints of all the team members, as we’ve all come from different industry backgrounds and have varied experiences with both Unity and Unreal, so I think we have a balanced set of views going into this conversation.

The conversation has been sparked by the announcement of Unreal Engine 5, which appears to offer truly compelling reasons to move away from Unity.

The graphical fidelity is next-generation, as expected, but in my mind the primary technical advantage offered is that the art workflow has entire sections completely removed from it – no more need to bake lighting, or even, supposedly, to optimise geometry!

These advancements alone would save the team huge amounts of time, irrespective of the graphical upgrades offered on top. It seems somewhat too good to be true, but raises important questions about the state of Unity in 2020.

In the context of Unreal Engine 4, I’ve been looking particularly at how we might integrate it with the rest of our tooling.

When building out our Unity workflow, a key part of it was a build system I developed to help us integrate Unity with GitLab and to manage scenes, development settings and versioning within the solutions themselves.

As a relative newcomer to Unreal Engine, I was surprised to find that it already has a build system, as well as an array of other automation tools, built right into the engine! There is still a lot of research and testing to do, so the next big step will be to try to establish a test project in order to see how well we can integrate the engine into our workflow.

Sergio – Programmer

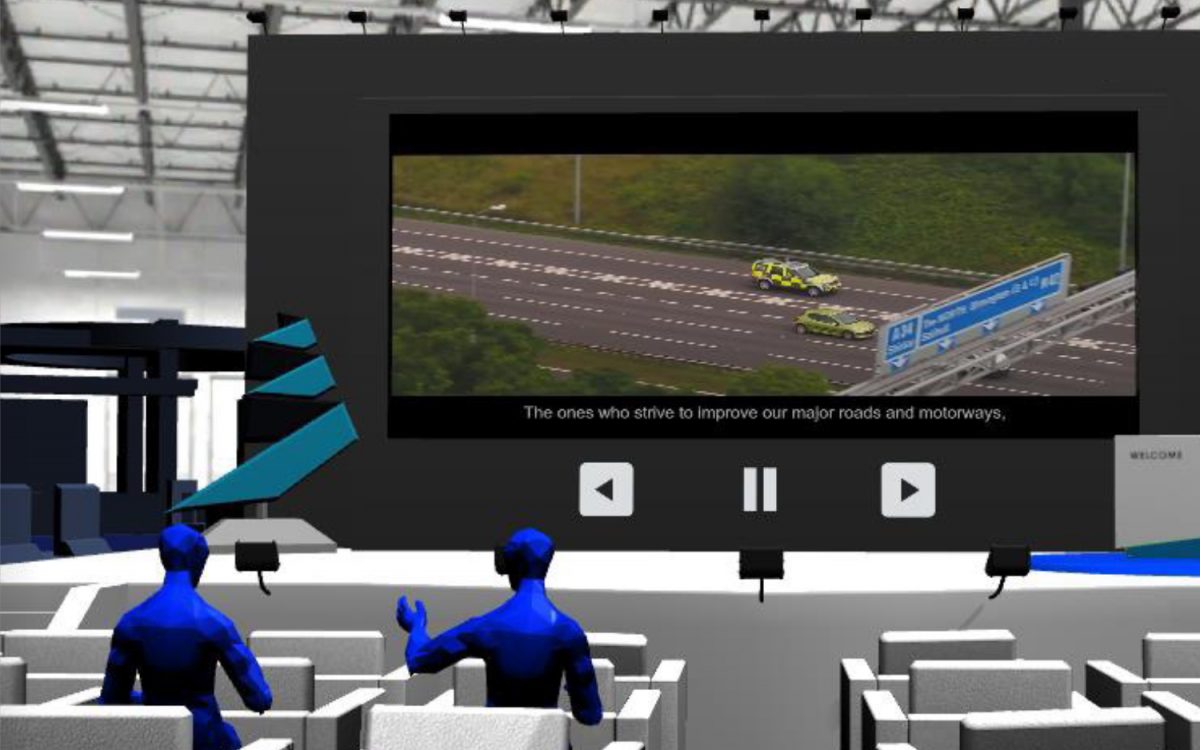

With new task requirements in hand, Kyung and I spent most of the week improving the upcoming Virtual Events experience.

I was responsible for making a video player controller which would allow users to switch between different videos in real time. I had to account for content loading delays in the browser and therefore implemented a buffering animation to provide visual feedback.
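As a rough sketch of the logic described above (not the project's actual code), the switcher can be modelled as a small state machine: a requested video stays pending, and the buffering animation stays visible, until the player reports that the video is ready. The class and method names here are hypothetical, and `on_ready` is assumed to be wired to whatever readiness signal the player exposes (in a browser, something like the media element's `canplay` event).

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class VideoSwitcher:
    """Hypothetical model of a video switcher with a buffering indicator."""

    current: Optional[str] = None  # video that is actually being shown
    pending: Optional[str] = None  # video requested but not yet ready

    @property
    def buffering(self) -> bool:
        # The buffering animation is visible while a switch is in flight.
        return self.pending is not None

    def request(self, video_id: str) -> None:
        """The user asks to switch to another video."""
        if video_id == self.current:
            # Already showing it: cancel any switch that was in flight.
            self.pending = None
            return
        self.pending = video_id

    def on_ready(self, video_id: str) -> None:
        """The player reports that a video has loaded enough to play."""
        # Ignore stale notifications for videos the user has moved on from.
        if video_id == self.pending:
            self.current = video_id
            self.pending = None


switcher = VideoSwitcher()
switcher.request("keynote")
print(switcher.buffering)  # True: show the buffering animation
switcher.on_ready("keynote")
print(switcher.buffering)  # False: hide it and play the video
```

Keeping the pending video separate from the current one means a quick second click simply replaces the pending request, and a late "ready" signal for the abandoned video is ignored.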

Aside from that, I worked on an information user interface which would be accessible from within the experience, to provide users with custom information about what they are seeing.

As I am jumping between both Events and our website project, I managed some progress on each, and next week I am planning to start designing the layout and creating the building blocks in code.

Slava – Lead 3D Artist

This week I was working on a low-poly version of a traffic officer vehicle for a WebGL-based online application. It was a tricky task to convert a high-polygon model of about 100,000 polygons into a low-polygon model of just about 3,000 polygons, while keeping as much detail as possible in both geometry and textures.

This task consisted of two steps. The first was the creation of a low-polygon shell over the original car, trying to reproduce the same shape as closely as possible. The second was to “bake” the original textures into new, smaller textures that correspond to a new UV layout.

An important part of this process was the creation of a normal map, which serves to transfer surface details from the high-polygon model onto the low-polygon one.
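To illustrate what a normal map stores, here is a minimal sketch of the common convention for packing a surface direction into an 8-bit RGB pixel: each component in the range -1 to 1 is remapped to 0 to 255. The function names are hypothetical, and the 8-bit RGB layout is an assumption about the texture format rather than a description of the baking tool used here.

```python
import math


def encode_normal(nx, ny, nz):
    """Pack a surface normal into an 8-bit RGB pixel (assumed convention)."""
    length = math.sqrt(nx * nx + ny * ny + nz * nz)
    if length == 0:
        raise ValueError("a normal must have non-zero length")
    # Normalise, remap each component from [-1, 1] to [0, 255], then round.
    return tuple(int((c / length * 0.5 + 0.5) * 255 + 0.5) for c in (nx, ny, nz))


def decode_normal(r, g, b):
    """Recover an approximate unit normal from an RGB pixel."""
    v = [c / 255 * 2 - 1 for c in (r, g, b)]
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


# A normal pointing straight out of the surface gives the familiar
# light blue seen across most tangent-space normal maps.
print(encode_normal(0.0, 0.0, 1.0))  # (128, 128, 255)
```

Because the low-polygon model reads these per-pixel directions when it is lit, it can appear to have bumps and creases that its 3,000 or so polygons could not represent on their own.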

Stefano – 3D Artist

Once again I find myself animating wild animals, which often present a real problem on the highways for traffic officers. The most recent recalcitrant ruminant is a cow.

To make a 3D model that moves realistically, I need to create ‘bones’ inside it, mainly by referencing the real skeletal structure of the animal. It’s important to have the centre of rotation of every bone in the right place to create a natural and realistic effect.

To make these bones easy to animate, I need to create appropriate controls.

Each control moves a bone or a group of bones in order to simplify the animation process. Controls also automate some complex behaviours of the skeleton and muscle structure, allowing the animator to work more comfortably with fewer controls.
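A toy 2D sketch of the idea follows, assuming a simple chain of bones where each bone rotates about the end of its parent. The function names and the single "curl" control are hypothetical and far simpler than a production rig; they only show how one control value can drive several bones at once.

```python
import math


def chain_positions(lengths, angles):
    """Return the joint positions of a 2D bone chain.

    Each angle (in radians) is relative to the parent bone, so rotating
    one bone carries every bone further down the chain with it.
    """
    x = y = 0.0
    total_angle = 0.0
    points = [(x, y)]
    for length, angle in zip(lengths, angles):
        total_angle += angle
        x += length * math.cos(total_angle)
        y += length * math.sin(total_angle)
        points.append((x, y))
    return points


def curl_control(value, bone_count):
    """One control driving many bones: spread a total bend evenly."""
    return [value / bone_count] * bone_count


# Three neck bones, all bent by a single control value of 90 degrees.
lengths = [1.0, 1.0, 1.0]
angles = curl_control(math.pi / 2, len(lengths))
for point in chain_positions(lengths, angles):
    print(round(point[0], 3), round(point[1], 3))
```

The animator only keys the one curl value; the per-bone rotations are derived from it, which is the kind of simplification a control is meant to provide.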

This week I’ve also worked on our new MXTreality splash screen, which is where I’ve been most encouraged to unleash my creativity. An exciting logo animation is coming.

Kyung-Min – 3D Generalist

This week, most of my effort went into bringing the MXTreality Events space work to a close. With a final motion capture session set up to produce the last set of animations, there’s only a day or so of tweaks left to clean them up and fine-tune them directly in the Events page.

Never a quiet moment at MXTreality and we already have a new, exciting project around the corner – we held a meeting to conceptualise potential approaches.

While I can’t say too much about it now, I can say that it is built around augmented reality and shows a wide range of applications beyond even our initial brief. Jumping from VR into OpenGL and AR the next day is very exhilarating and feels like moving between different dimensions.

We are also looking at expanding our software development library beyond just Unity to bring in other potential options, beginning with the behemoth Epic Games, so not just Unreal Engine 4 but the upcoming Unreal Engine 5.

While there is much excitement around the graphical fidelity in the trailer they released, my interest was focussed more on what it would mean for the graphical gap in virtual reality.

What we can currently do is limited to about 50% of what the current top-end games can produce, as we must render everything twice through two cameras. But Unreal Engine 5 appears to change the fundamentals of how these images are processed, so that billions of polygons can be loaded directly. It has been speculated that it uses an approach similar to voxels or point cloud data!

If that is correct, what this could mean for VR is that, instead of creating one scene and having to render it from two angles, we could simply create the environment twice and display a different angle of the same environment on each screen, removing the graphical or performance gap.

Because much of our work currently revolves around optimising assets to offset the performance costs of VR, this would change our workflow and take a huge amount of work off the art department, allowing us to focus on making things look good rather than making them run.

Working to get the best results now, but also taking time to prepare and build for the future, is always a consideration, so it has been exciting to have time to discuss and study potential options.

We have all experienced corporate meetings that involve mostly statistics and projections, but I’ve realised here that a lot of the freedom to experiment and make decisions is left to us creators and programmers.

This ensures that when we are called for a meeting or to discuss a new brief, we all approach it with a sense of excitement, energised by the new project. So keep your eyes peeled as we build up to our next AR project. I’m confident you will be impressed!

Jordi Caballol – Programmer

This week I’ve added the last details to the game and moved on to new projects, specifically Scenario 4 for our traffic officer VR.

For the scenario I’m setting up all the usual stuff in the scene. Thanks to having developed the previous scenarios, we already have almost everything we need and it’s now just a matter of using it all to create the new scenes.

But there is a more interesting part in this Scenario 4: they also want a smooth transition when we load the scenarios, so we are going to try to recreate the holodeck from Star Trek. This week has been all about researching how to do it and next week it will be time to implement it – can’t wait!